IT Challenge: Reinvent business models… or disappear!

Technology has significantly lowered barriers to market entry, and the spread of free-of-charge models has fostered new business models that have destabilized the positions of incumbent players in most sectors.

In fact, in this digital age, existing approaches to developing and describing business models are no longer suited to new connected business models. Technologies and services become obsolete faster than in the past, consumers are hungry for innovation and a strong customer experience, and the need for agility weighs on production capacities and information systems. Cooperation therefore becomes a must.

In this changing context, the risk of a company disappearing has never been greater: this is what drives collaboration and alliances between players that are often competitors. Faced with the decline that threatens businesses of all sizes, cooperation can be seen as a positive competitive strategy. This context calls for identifying the strengths and weaknesses of large organizations relative to their competitors. By 2020, the ability to renew their business models will be critical to the growth and profitability of large firms.

Capitalizing on Data and Customer Experience

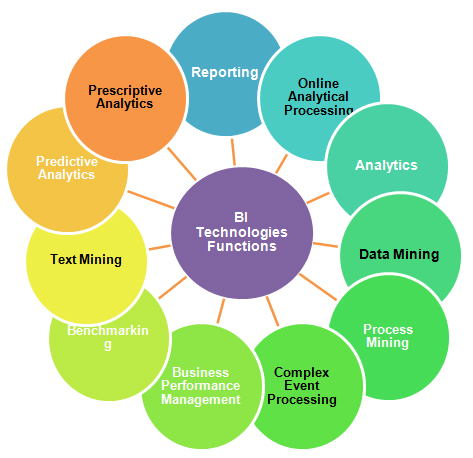

Data is the black gold of the present and the future. Yet according to Gartner, 85% of Fortune 500 organizations will not gain a real competitive advantage from Big Data by 2020. Most of them will still be at the experimental stage, while those that have capitalized on Big Data will gain a competitive advantage of 25% or more over their competitors. The development of new products and services, driven by the intelligent use of data, therefore creates real change and new business opportunities.

In a context where the risk of disintermediation is high, control of customer relationships, mass customization, and co-design with consumers will be fundamental to companies' success in 2020.

Challenges: Differentiating and Innovating

- Understand: it is essential to keep track of the business models and strategies of digital-world competitors, which are both potential threats and powerful levers for development.

- Transform: large groups, especially economically powerful ones, often find it difficult to transform their organization and integrate innovation because of their complexity.

- Listen: anticipating consumer needs and focusing on the customer experience means continually evolving business models to build business agility.

- Collaborate: creating strategic partnerships with the company’s ecosystem, especially suppliers, accelerates innovation processes and reduces time to market.

- Adjust: the digital transformation must take into account the context and the business challenges of the company.

- Innovate: software development know-how is becoming increasingly strategic for the company.

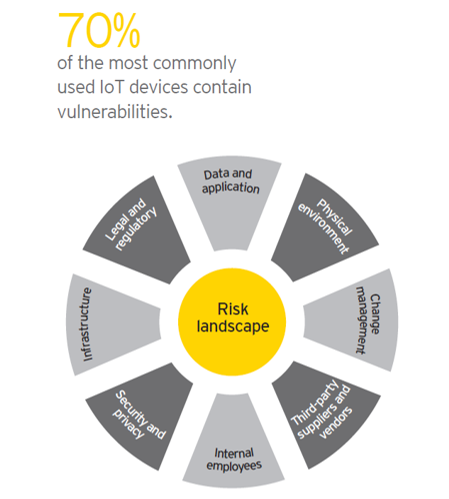

Data breaches are a constant threat to all organizations, and the risk keeps growing. In 2016, the total number of identities exposed in data breaches rose by 23%, with a record 100,000 incidents, of which 3,141 were confirmed data breaches. Data can now be compromised within minutes, and exfiltrating it takes only days.