Artificial Intelligence and the Transformation of the Corporate World

Companies worldwide collect and hold huge amounts of data in the form of documents. Because many of these documents are not digitized, they often cannot be fed into business processes, or only with considerable manual effort. Yet they usually contain important, business-critical information, so losing it, or even just a delay in retrieving it, can have a major impact on a company's success.

However, thanks to rapid advances in cognitive technologies for automated text capture, organizations can now easily digitize, classify and automatically read their unstructured business documents and pass the extracted data on to the relevant business processes. With such fully automated solutions, companies not only save time and money but also greatly improve the quality of the data in their systems and significantly speed up response times and important decisions.

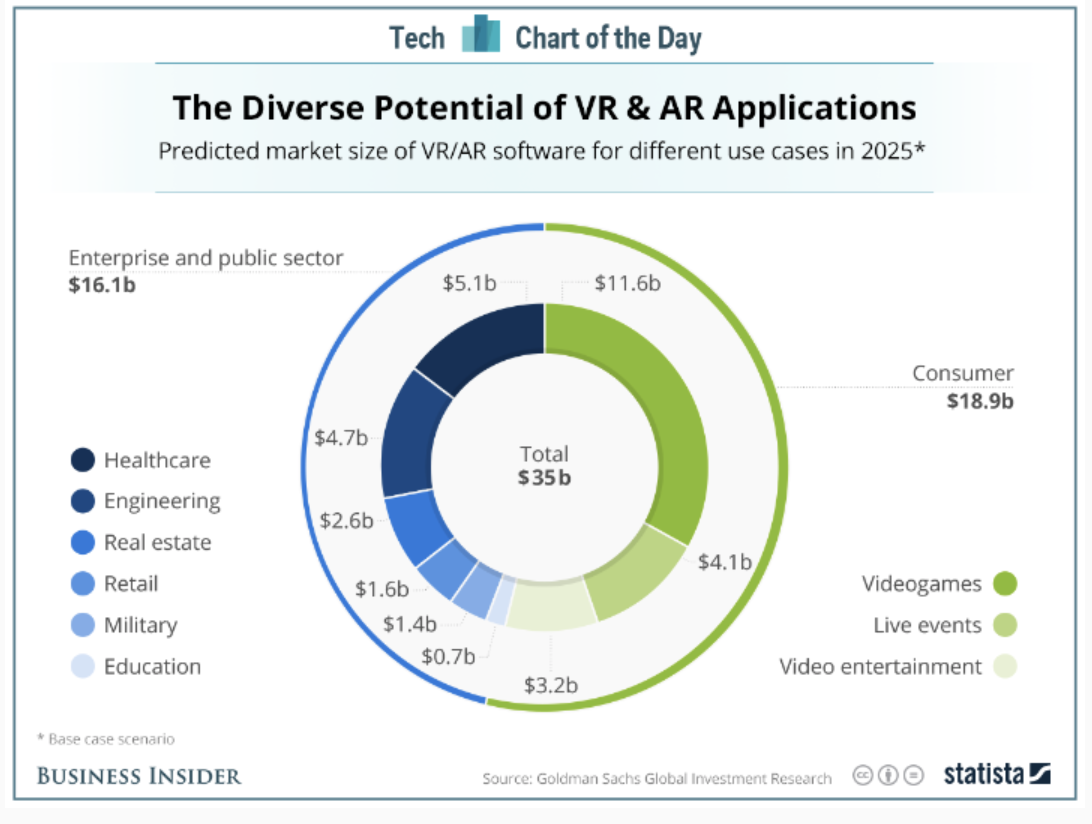

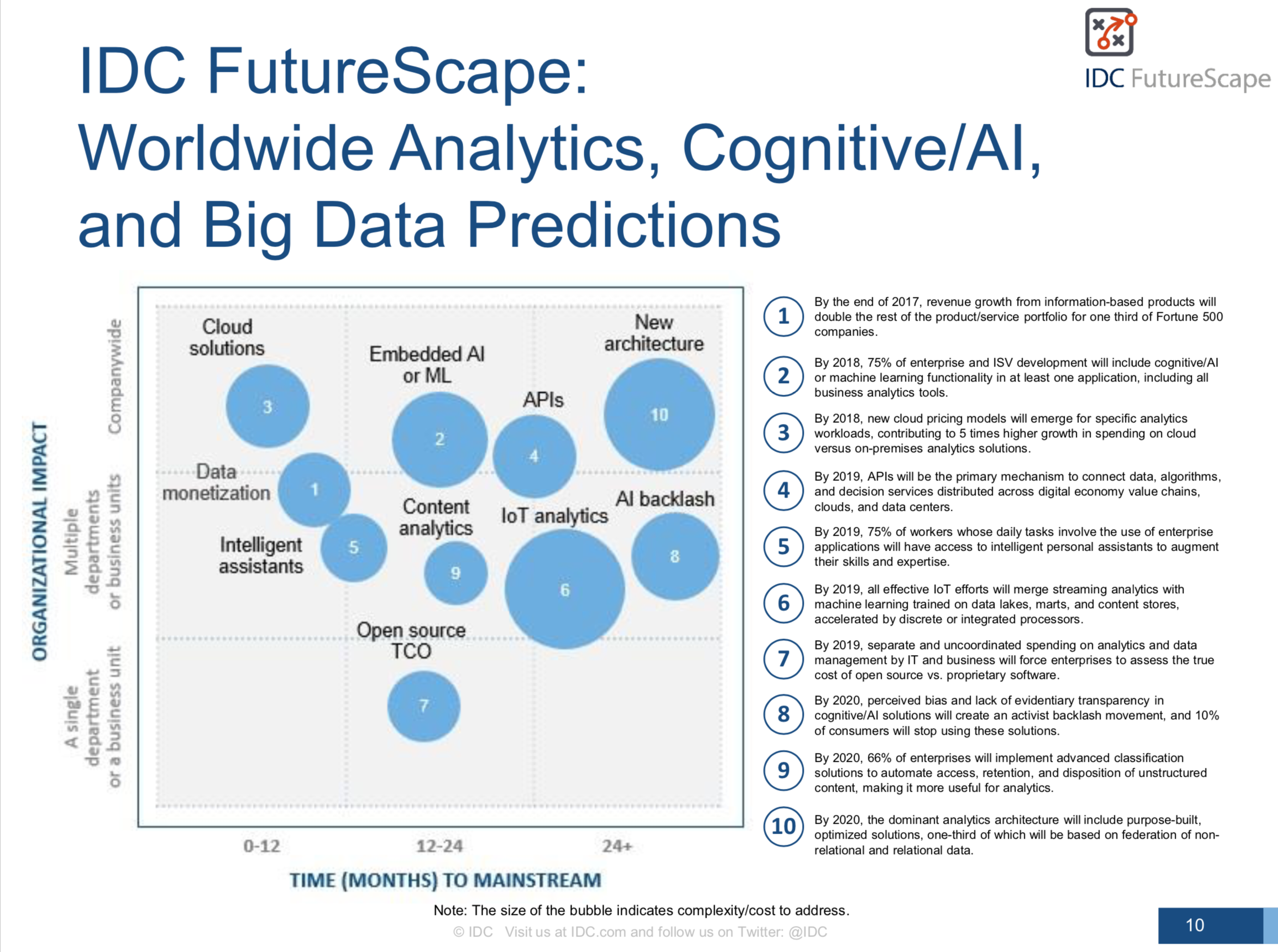

Computer vision in particular has evolved enormously in recent years. The ability to recognize and process text quickly on almost any device has improved greatly since the days when documents had to be scanned and analysed with OCR technology. This rapid development is also reflected in industry figures: IDC forecast that the world market for content analytics, discovery and cognitive systems software would reach $9.2 billion by 2019, more than twice the 2014 figure. To make the most of these market changes, IT solution providers need to better serve the rapidly growing demand for machine learning and artificial intelligence (AI). Only then can they meet tomorrow's customer requirements and remain relevant.

Employees at the center of every business

The fear that AI-based automation solutions could replace skilled employees is unfounded. Regardless of, or even because of, AI-based solutions, companies need well-trained employees who understand both the company's core values and its technological processes. People have qualities that AI solutions depend on, such as empathy, creativity, judgment and critical thinking. That is why qualified employees will remain essential to a company's success in the future.

Companies as drivers of digital transformation

First and foremost, businesses need systems that support their professionals and relieve them of day-to-day routine work, enabling them to work more productively and creatively. Above all, modern systems must be able to learn behaviour independently from past experience and to suggest the next course of action. For this to work, companies need professionals who can guide these systems; only then do automated workflows become possible at all.

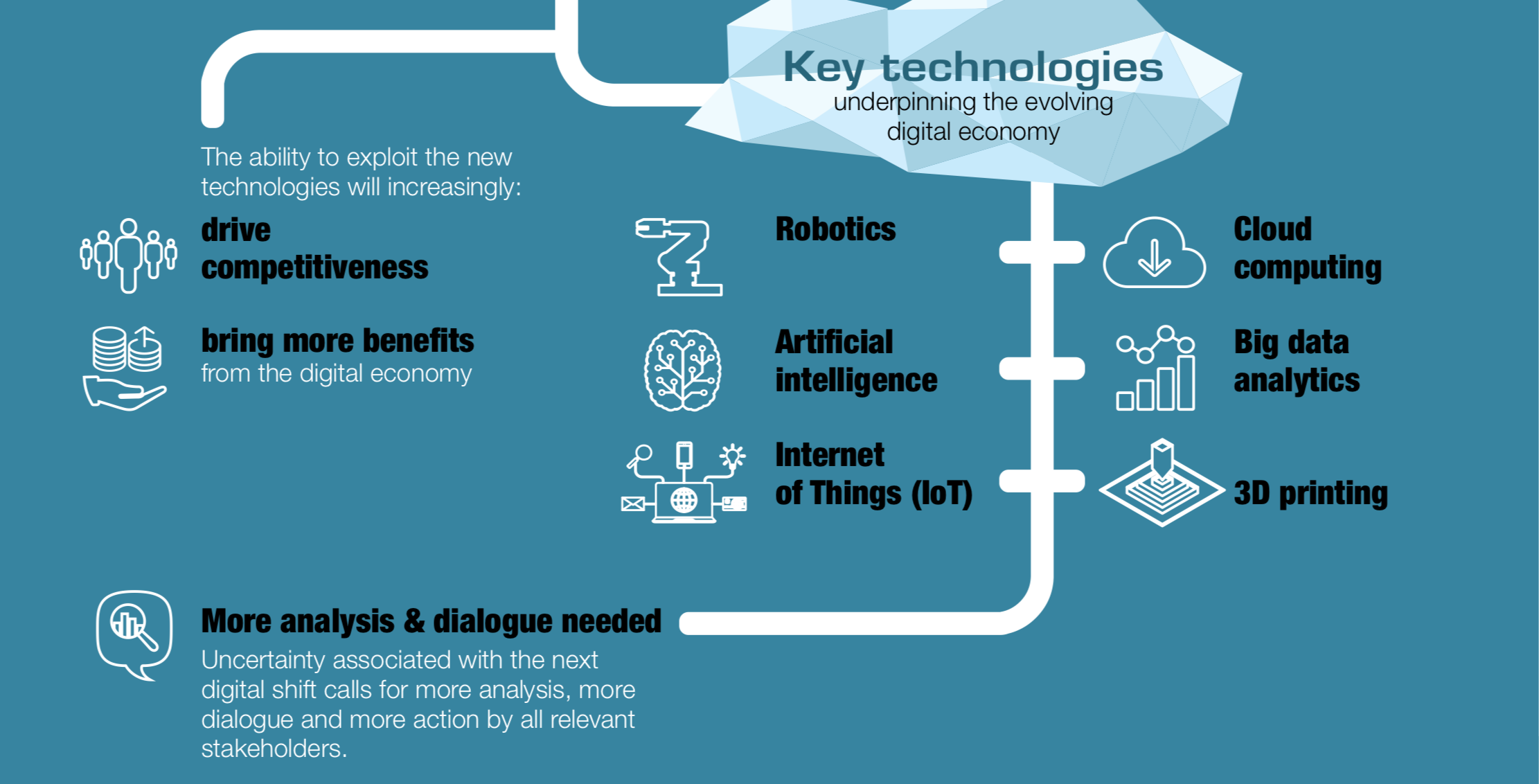

Robotic Process Automation (RPA) and machine learning drive the automation of routine, repetitive tasks. RPA software is a powerful way to make manual, time-consuming, rule-based office activities more efficient. It reduces throughput times, and does so at a lower cost than other automation solutions. In addition, AI will make even more types of these tasks automatable. The combination of RPA and machine learning will undoubtedly create a large market segment with high demand, namely for identifying processes and implementing them intelligently.

The next five years

Once companies have automated various tasks using AI, they are expected to want increasingly to monitor and understand how these processes affect their organization. As a result, companies will undergo a fundamental change over the next three to five years, driven mainly by the convergence of RPA and AI in the following areas:

The use and further development of RPA will bring a wave of machine learning advances, for example in task automation and document processing. Even processes that involve basic decision-making benefit greatly from RPA. Meanwhile, use cases traditionally associated with capturing data from documents will converge with a growing number of new document-based RPA use cases. AI technology is now used more widely and offers advantages for identifying and automating processes as well as for analysing them.

AI will also automate basic tasks that qualified staff perform today. This will have a major impact on the size and composition of companies' workforces, especially in the fintech, healthcare, transport and logistics sectors. Above all, companies in every industry will benefit from optimized customer-relations processes. Public authorities, too, can offer citizens quicker response times and better service through intelligent automation.

Finally, robotics is much more than R2-D2 or C-3PO. Software robots will think much faster than most people and, acting intelligently and according to the situation, will permeate the corporate work environment: in data and document capture, RPA, analytics, and monitoring and reporting.

Ready for change

To stay successful, businesses need to prepare for the age of AI today. This requires a significant shift in the skills the company needs. Above all, it is up to employees to be open to new technologies and to see them as an opportunity to gain a competitive advantage.

In general, intelligent systems will take on more of the work in the future. In lending, for example, the role of the responsible clerk will keep shrinking, because the system will be able to make intelligent decisions independently based on the borrower's previous financing behaviour. Ultimately, the clerk only has to deal with rule-based exceptions. This relieves loan officers of many routine tasks and lets them spend more time on customer care, which significantly increases the bank's productivity.

A further shift in competence arises because the process requires less human control and expertise. As software becomes more knowledgeable, it depends less on employees. Their duties therefore become smaller in scope but carry more responsibility.
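To make the lending example more concrete, here is a minimal sketch of how such a workflow might split the work. Clear-cut applications are decided automatically from the borrower's repayment history, and exceptions are routed to a clerk. The `Application` fields, the `triage` function and every threshold are hypothetical. A plain rule-based check also stands in for the machine-learning model that a real system would more likely use.

```python
from dataclasses import dataclass


@dataclass
class Application:
    """Hypothetical loan application record (fields are illustrative only)."""
    applicant_id: str
    amount: float          # requested loan amount
    on_time_ratio: float   # share of past repayments made on time, 0.0 to 1.0
    prior_loans: int       # number of previously completed loans


def triage(app: Application,
           max_auto_amount: float = 50_000.0,
           approve_ratio: float = 0.95,
           decline_ratio: float = 0.5,
           min_history: int = 2) -> str:
    """Return 'approve', 'decline' or 'review'; all thresholds are assumptions."""
    # Rule-based exceptions: large amounts or too little history go to a clerk.
    if app.amount > max_auto_amount or app.prior_loans < min_history:
        return "review"
    # Clear-cut cases are decided automatically from past repayment behaviour.
    if app.on_time_ratio >= approve_ratio:
        return "approve"
    if app.on_time_ratio < decline_ratio:
        return "decline"
    # Anything in between also lands on the clerk's desk.
    return "review"


if __name__ == "__main__":
    queue = [
        Application("A-001", 12_000, 0.98, 4),
        Application("A-002", 80_000, 0.99, 10),
        Application("A-003", 8_000, 0.30, 3),
        Application("A-004", 5_000, 1.00, 0),
    ]
    for app in queue:
        print(app.applicant_id, triage(app))
```

In practice the approve/decline branch would more plausibly come from a trained model's score, with thresholds set by the bank's risk policy. The sketch only illustrates the division of labour described above: the system handles routine cases, and the clerk's queue shrinks to the exceptions.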