How #DeepLearning is revolutionizing #ArtificialIntelligence

This learning technology, based on artificial neural networks, has turned the field of artificial intelligence upside down in less than five years. “It’s such a rapid revolution that we have gone from a somewhat obscure system to a system used by millions of people in just two years,” confirms Yann LeCun, one of the creators of deep learning.

All the major tech companies, such as Google, IBM, Microsoft, Facebook, Amazon, Adobe, Yandex and even Baidu, are using it. This learning and classification system, based on digital “artificial neural networks”, is used both to understand speech, by Siri, Cortana and Google Now, and to learn to recognize faces.

What is “Deep Learning”?

In concrete terms, deep learning is a learning process that applies deep neural networks so that a program can solve problems such as recognizing the content of an image or understanding spoken language, complex challenges the artificial intelligence community has long worked on.

To understand deep learning, we must go back to supervised learning, a common AI technique that allows machines to learn. For a program to learn to recognize a car, for example, it is “fed” tens of thousands of labeled car images. This “training” may take hours or even days. Once trained, the program can recognize cars in new images. Besides voice recognition in Siri, Cortana and Google Now, deep learning is primarily used to recognize the content of images. Google Maps uses it to decipher text that appears in street scenes, such as house numbers. Facebook uses it to detect images that violate its terms of use, and to recognize and tag users in published photos, a feature not available in Europe. Researchers use it to classify galaxies.
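To make the idea of supervised learning concrete, here is a minimal sketch in Python under a toy assumption: a perceptron (one of the simplest learning algorithms, not a deep network) is “fed” a handful of labeled examples, adjusts its weights during training, and then classifies a new example. The two-number feature vectors and the names `train_perceptron` and `predict` are hypothetical; a real image classifier learns from thousands of labeled images.

```python
# Minimal supervised learning sketch: a perceptron trained on labeled examples.
# The toy data is hypothetical; real image classifiers learn from thousands
# of labeled images, not four hand-made 2-D points.


def predict(w, b, x):
    """Return 1 if the weighted sum of the features is positive, else 0."""
    activation = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1 if activation > 0 else 0


def train_perceptron(examples, epochs=20, lr=0.1):
    """Learn weights w and bias b from (features, label) pairs, label in {0, 1}."""
    w = [0.0] * len(examples[0][0])
    b = 0.0
    for _ in range(epochs):
        for x, label in examples:
            error = label - predict(w, b, x)  # 0 if correct, +1 or -1 if wrong
            # Nudge the weights toward the correct answer.
            w = [wi + lr * error * xi for wi, xi in zip(w, x)]
            b += lr * error
    return w, b


# "Training": labeled examples (features invented for illustration).
training_set = [
    ([1.0, 1.0], 1),  # labeled "car"
    ([0.9, 0.8], 1),  # labeled "car"
    ([0.1, 0.2], 0),  # labeled "not car"
    ([0.2, 0.0], 0),  # labeled "not car"
]
weights, bias = train_perceptron(training_set)

# Once trained, the model classifies a new, unseen example.
print(predict(weights, bias, [0.95, 0.9]))  # → 1
```

The labels are what make this “supervised”: every correction the perceptron makes comes from comparing its guess with the label a human provided.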

Deep learning also uses supervised learning, but the internal architecture of the machine is different: it is a “neural network”, a virtual machine made up of thousands of units (neurons) that each perform small, simple calculations. The key feature is that the outputs of the first layer of neurons serve as inputs to the calculations of the next layer, and so on. This layered operation is what makes this type of learning “deep”.
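The layered structure can be sketched as a forward pass in plain Python. This is an illustrative sketch, not how any particular framework implements it: the network size, the arbitrary weights, the sigmoid nonlinearity and the helper names `layer` and `forward` are all assumptions. The point is only that each layer’s outputs become the next layer’s inputs.

```python
import math


def layer(inputs, weights, biases):
    """One layer: each neuron computes a weighted sum of its inputs plus a bias,
    then applies a simple nonlinearity (here, the logistic sigmoid)."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(1.0 / (1.0 + math.exp(-total)))
    return outputs


def forward(inputs, network):
    """Feed the outputs of each layer in as the inputs of the next one."""
    activations = inputs
    for weights, biases in network:
        activations = layer(activations, weights, biases)
    return activations


# A tiny, hypothetical network: 3 inputs -> 2 hidden neurons -> 1 output.
# The weights are arbitrary; in practice they are learned during training.
network = [
    ([[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]], [0.0, 0.1]),  # hidden layer
    ([[1.0, -1.0]], [0.2]),                               # output layer
]
print(forward([1.0, 0.5, -1.0], network))
```

Adding depth here just means appending more `(weights, biases)` pairs to `network`; the `forward` loop stays the same.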

One of the most spectacular achievements of deep learning came in 2012, when Google Brain, the company’s deep learning project, “discovered” the concept of a cat on its own. This time, the learning was not supervised: the machine spent three days analyzing ten million images taken at random from YouTube videos and, above all, unlabeled. At the end of this training, the program had learned to detect cat faces and human bodies, shapes that appear frequently in the analyzed images. “What is remarkable is that the system discovered the concept of a cat by itself. Nobody ever told it that it was a cat. This marked a turning point in machine learning,” said Andrew Ng, founder of the Google Brain project, in Forbes.
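Google Brain’s system was a very large neural network, and the sketch below does not reproduce it. Instead it swaps in k-means clustering, a much simpler unsupervised technique, to illustrate the core idea that a program can find groups in data nobody has labeled. The points and the `kmeans` helper are hypothetical, and starting the centroids at the first k points is a simplification chosen for reproducibility.

```python
import math


def kmeans(points, k, iterations=10):
    """Group unlabeled points into k clusters with plain k-means.
    Centroids start at the first k points (a simplification for reproducibility)."""
    centroids = [list(p) for p in points[:k]]
    clusters = [[] for _ in range(k)]
    for _ in range(iterations):
        # Assignment step: attach each point to its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster.
        for i, cluster in enumerate(clusters):
            if cluster:
                centroids[i] = [sum(coord) / len(cluster) for coord in zip(*cluster)]
    return centroids, clusters


# Unlabeled data: two groups exist, but nothing says which point is which.
points = [(0.1, 0.2), (5.0, 5.1), (0.0, 0.1), (5.2, 4.9), (0.2, 0.0), (4.9, 5.0)]
centroids, clusters = kmeans(points, k=2)
print(sorted(len(c) for c in clusters))  # → [3, 3]
```

The program is never told which point belongs to which group; the two clusters emerge from the data alone, much as frequent shapes did in the YouTube images.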

Why is everyone talking about it now?

The basic ideas of deep learning date back to the late 1980s, with the birth of the first neural networks. Yet the method has only enjoyed its moment of glory in the past few years. Why? Because although the theory was already in place, putting it into practice became feasible only very recently: the power of today’s computers, combined with the mass of data now available, has multiplied the effectiveness of deep learning.

“By taking software written in the 1980s and running it on a modern computer, we get more interesting results,” says Andrew Ng in Forbes.

The field has advanced so far that experts can now build more complex neural networks, and progress in unsupervised learning gives deep learning a new dimension. Experts confirm that the more layers they add, the more complicated and abstract the things the networks learn, closer to the way humans reason. Yann Ollivier believes that within 5 to 10 years, deep learning will be widespread in all decision-making electronics, such as those in cars or aircraft. He also thinks that special neural networks will make medical diagnostic aids more powerful. According to him, robots will soon be endowed with this kind of artificial intelligence as well: “A robot could learn to do housework on its own, and that would be much better than robot vacuums, which are not so extraordinary,” he says.

At Facebook, Yann LeCun wants to use deep learning “more systematically for the representation of information”: in short, to develop an AI capable of understanding the content of the texts, photos and videos that users publish. He also dreams of creating a personal digital assistant that people could talk to by voice.

The future of deep learning looks very bright, but Yann LeCun remains cautious: “We are in a very enthusiastic phase, and it is very exciting. But there is also a lot of nonsense being said, and there are exaggerations. We hear that we will create intelligent machines in five years, that Terminator will wipe out the human race in ten years… Some people also place great hopes in these methods that may never materialize.”

In recent months, several public figures, including Microsoft founder Bill Gates, British astrophysicist Stephen Hawking and Tesla CEO Elon Musk, have expressed concern that progress in artificial intelligence could be harmful. Yann LeCun is pragmatic and recalls that the field of AI has often suffered from disproportionate expectations. He hopes that, this time, the discipline will not fall victim to this “inflation of promises”.

Sources:

- Google’s Artificial Brain Learns to Find Cat Videos

- The evolution of deep learning and machine learning

- The AI Revolution: Why You Need to Learn About Deep Learning

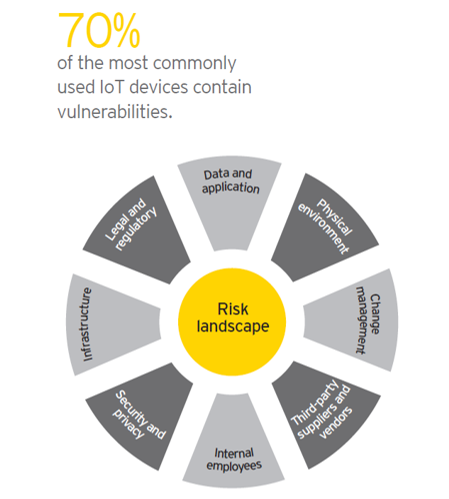

Data breaches are a constant threat to all organizations, and the risk keeps growing. In 2016, the total number of identities exposed in data breaches increased by 23%, with a record 100,000 incidents, of which 3,141 were confirmed data breaches. Data can now be compromised in a few minutes, and its exfiltration takes only a few days.