Big Data, a buzzword for information overload, covers a set of technologies and practices for storing large amounts of data and analysing them in the blink of an eye. Big Data is shaking up the way we do business, and the competitiveness of companies and organisations now depends on their ability to manage and analyse this data. The Big Data phenomenon is therefore considered one of the great IT challenges of the next decade.

Four major technological axes are at the heart of the digital transformation:

- Mobile and Web: The fusion of the real and virtual worlds,

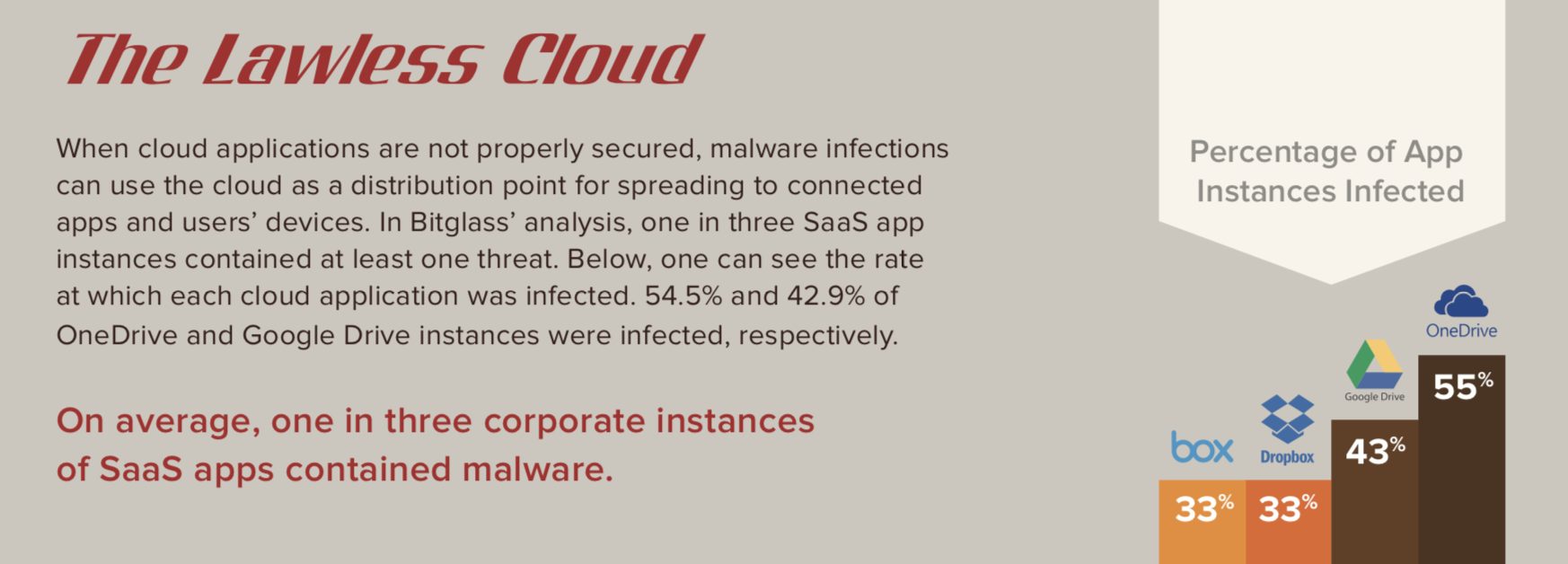

- Cloud computing: The Web as a ubiquitous platform for services,

- Big Data: The data revolution,

- Social empowerment: The redistribution of roles.

Interconnected and mutually reinforcing, these four axes are the foundations of digital transformation. Data, whether global or hyperlocal, enables the development of innovative products and services, especially through highly personalised social and mobile experiences. In this sense, data is the fuel of digital transformation.

Smart mobile devices and permanent connectivity form a platform for social exchanges, from which new methods of work and organisation are emerging. Social technologies connect people to each other, to their businesses and to the world, based on new relational models in which power relations are profoundly altered. Finally, cloud computing makes it possible to develop and deliver, transparently, the information and services that users and companies need.

According to Eric Schmidt, Chairman of Google, we now create as much information every two days as we did from the dawn of civilisation up to 2003. For companies, the challenge is to process and exploit the available data in order to improve their competitiveness. In addition to the “classical” data that companies already handle and exploit with Business Intelligence techniques, there is now informal data, stemming mainly from crowdsourcing via social media, mobile devices and, increasingly, sensors embedded in objects.

Why Big Data, and why now?

Three factors explain the development of Big Data:

- The cost of storage: it keeps falling and is becoming less and less of a deciding criterion for companies. Cloud computing solutions also allow data management to scale elastically with the actual needs of enterprises.

- Distributed storage platforms and very high-speed networks: with the development of high-speed networks and cloud computing, where data is physically stored hardly matters any more. Data is now stored in separate, and sometimes unidentified, physical locations.

- New technologies for data management and analysis: among these Big Data technologies, one of the references is the Hadoop platform (Apache Software Foundation), which supports the development and management of distributed applications that process huge and growing amounts of data.

Combined, these three factors tend to turn data management and storage into a “simple” service.
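Hadoop’s processing model, MapReduce, splits a job into a map step run in parallel on each block of data, a shuffle that groups intermediate results by key, and a reduce step that aggregates each group. The sketch below is a minimal single-process illustration of that model in plain Python (a word count over hypothetical input splits); it does not use Hadoop’s actual APIs and ignores distribution, fault tolerance and storage.

```python
from collections import defaultdict
from itertools import chain

# Hypothetical input: each string stands for one input split (e.g. one block of a file).
SPLITS = [
    "big data is the fuel of digital transformation",
    "data is stored in distributed locations",
    "hadoop processes big data in parallel",
]


def map_phase(split):
    """Map: emit a (word, 1) pair for every word in one input split."""
    return [(word, 1) for word in split.split()]


def shuffle_phase(pairs):
    """Shuffle: group emitted values by key, as the framework does between map and reduce."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Reduce: aggregate all values emitted for one key."""
    return key, sum(values)


def word_count(splits):
    # On a cluster, each map task would run near the node storing its split;
    # here every phase runs sequentially in one process.
    mapped = chain.from_iterable(map_phase(s) for s in splits)
    grouped = shuffle_phase(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    counts = word_count(SPLITS)
    print(counts["data"], counts["big"])  # prints: 3 2
```

Because map tasks only see their own split and reducers only see one key’s values, each phase can be spread across many machines, which is what lets this style of program scale to very large data sets.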

Sources of Data:

To understand the Big Data phenomenon, it helps to identify where data is produced.

- Professional applications and services: management software such as ERP, CRM and SCM, content management and office automation tools, intranets, and so on. Although companies know these tools well and use them widely, Microsoft has acknowledged that half of the content produced with the Office suite is unmanaged and therefore never exploited. The explosion of e-mail has amplified this phenomenon: 200 million e-mails are sent every minute.

- The Web: by moving onto the Web with news, e-commerce, government and community websites, companies and organisations have created a considerable amount of data and generated ever more interactions. This made directories and search engines necessary, and these in turn generate countless data from users’ queries.

- Social media: by enabling crowdsourcing, Web 2.0 is at the root of the phenomenal growth in the amount of data produced over the past ten years: Facebook, YouTube and Twitter, of course, but also blogs, sharing platforms such as Slideshare, Flickr, Pinterest or Instagram, RSS feeds, and corporate social networks such as Yammer or BlueKiwi. Every minute, more than 30 hours of video are uploaded to YouTube, 2 million posts are published on Facebook and 100,000 tweets are sent.

- Smartphones: as IBM puts it, the mobile is not a terminal; the mobile is the data. There are now four times more mobile phones in use than PCs and tablets. A “standard” mobile user interacts with their smartphone 150 times a day, including messages and social interactions. Combined with social media and cloud computing services, mobile has become the leading mass medium. By the end of 2016, Apple’s App Store and Google Play had recorded over 95 billion app downloads.

- IoT: mobile has paved the way for the Internet of Things. Everyday objects equipped with sensors, in our homes or in industry, are now potential digital terminals, permanently capturing and transmitting data. The industrial giant General Electric is installing intelligent sensors on most of its products, from basic electrical equipment to turbines and medical scanners. The collected data is analysed to improve services, develop new ones or minimise downtime.

Data visualization:

A picture is worth a thousand words. Intelligent, usable visualization of analytics is a key factor in deploying Big Data in companies, and the development of infographics goes hand in hand with that of data-processing techniques.

Data visualization makes it possible to:

- truly show the data: where data tables quickly become unmanageable, diagrams, charts and maps make the data quick and easy to understand;

- reveal details: data visualization exploits the human eye’s ability to take in a picture as a whole while capturing details that would go unnoticed in a text or a spreadsheet;

- provide quick answers: by eliminating the query process, data visualization reduces the time it takes to produce business-relevant information, for example about how a website is used;

- make better decisions: by making visible the patterns, trends and relationships that emerge from data analysis, visualization helps the company improve the quality of its decisions;

- simplify analysis: data visualizations should be interactive, as Google’s Webmaster Tools are. By offering simple, intuitive functions for changing data sets and analysis criteria, such tools unleash users’ creativity.

Big Data Uses:

The uses of Big Data are endless, but some major areas emerge.

Understand customers and customize services

This is one of the most obvious applications of Big Data. By capturing and analyzing as many data flows about its customers as possible, a company can not only build generic profiles and design specific services, but also customize these services and the marketing actions associated with them. These flows combine “conventional” data already organized in CRM systems with unstructured data from social media or from intelligent sensors that can analyze customer behavior at the point of purchase. The objective is therefore to identify models that can predict customers’ needs in order to offer them personalized services in real time.
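One simple way to turn purchase histories into personalized suggestions is user-based collaborative filtering: score each product a customer has not bought by how similar its buyers are to that customer. The sketch below assumes hypothetical purchase data and uses Jaccard similarity between purchase sets; it illustrates the idea only, and real systems use far richer models and data.

```python
from collections import Counter

# Hypothetical purchase histories: customer id -> set of products bought.
PURCHASES = {
    "alice": {"running shoes", "water bottle", "sports watch"},
    "bob": {"running shoes", "water bottle", "headphones"},
    "carol": {"running shoes", "sports watch", "energy bars"},
    "dave": {"novel", "reading lamp"},
}


def jaccard(a, b):
    """Similarity of two purchase sets: shared items divided by all distinct items."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def recommend(customer, purchases, top_n=2):
    """Rank products the customer does not own by the summed similarity of the customers who bought them."""
    owned = purchases[customer]
    scores = Counter()
    for other, items in purchases.items():
        if other == customer:
            continue
        sim = jaccard(owned, items)
        if sim == 0:
            continue  # customers with nothing in common contribute nothing
        for item in items - owned:
            scores[item] += sim
    # Highest score first; ties broken alphabetically so results are deterministic.
    ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
    return [item for item, _ in ranked[:top_n]]


if __name__ == "__main__":
    print(recommend("alice", PURCHASES))  # prints: ['energy bars', 'headphones']
```

A customer with no overlap with anyone (here `dave`) gets no recommendations, which is the classic cold-start limitation of this approach.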

Optimize business processes

Big Data has a strong impact on business processes. Complex processes such as Supply Chain Management (SCM) can be optimized in real time based on forecasts drawn from social media analysis, shopping trends, traffic patterns or weather stations. Human resources management is another example, from recruitment to assessing corporate culture or measuring staff commitment and needs.
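As a hedged illustration of demand forecasting for a supply chain, the sketch below applies simple exponential smoothing to a hypothetical series of daily sales, then sizes an order to cover the replenishment lead time. The function names, the smoothing factor and the reorder rule are assumptions for illustration; real SCM forecasts would also incorporate external signals such as social media trends or weather.

```python
def exponential_smoothing(series, alpha=0.5):
    """Simple exponential smoothing: level = alpha * x + (1 - alpha) * previous level.

    Returns the smoothed level after the last observation, used as the
    one-step-ahead forecast.
    """
    if not series:
        raise ValueError("series must not be empty")
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    level = float(series[0])  # initialise the level with the first observation
    for x in series[1:]:
        level = alpha * x + (1 - alpha) * level
    return level


def units_to_order(forecast_per_day, lead_time_days, stock_on_hand):
    """Hypothetical reorder rule: cover forecast demand over the lead time, minus current stock."""
    needed = forecast_per_day * lead_time_days
    return max(0, round(needed - stock_on_hand))


if __name__ == "__main__":
    daily_units = [100, 120, 110, 130]  # hypothetical daily sales
    forecast = exponential_smoothing(daily_units, alpha=0.5)
    print(forecast)  # prints: 120.0
    print(units_to_order(forecast, lead_time_days=3, stock_on_hand=200))  # prints: 160
```

A larger `alpha` makes the forecast react faster to recent sales at the cost of more noise; a smaller one smooths more but lags behind real shifts in demand.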

Improve health and optimize performance

Big Data will greatly affect individuals, first of all through the “Quantified Self” phenomenon, that is, the capture and analysis of data about our bodies, our health or our activities via mobiles, wearables (watches, bracelets, clothing, glasses, …) and, more generally, the Internet of Things. Big Data also enables considerable advances in fields such as DNA sequencing, epidemic prediction and the fight against incurable diseases such as AIDS. With modeling based on vast quantities of data, clinical trials are much less limited by sample size.

Make machines intelligent

Big Data is making the most diverse machines and terminals smarter and more autonomous, and it is essential to the development of industry. With sensors multiplying on domestic, professional and industrial equipment, Big Data applied to M2M (Machine to Machine) offers multiple opportunities for companies that invest in this market. Smart cars illustrate this phenomenon. They already generate huge amounts of data that can be harnessed to optimize the driving experience or pricing models. Smart cars exchange real-time information with each other and can optimize their use according to specific algorithms.

Similarly, smart homes are major contributors to the growth of M2M data. Smart meters monitor energy consumption and are able to propose optimized behaviors based on models derived from analytics.
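A minimal sketch of the kind of rule a smart meter could derive from its readings: average the consumption recorded at each hour of the day, then suggest moving flexible loads from the peak hour to the lowest-consumption hour. The readings and the suggestion rule are hypothetical and stand in for the richer analytics models described above.

```python
from statistics import mean

# Hypothetical smart-meter readings: hour of day -> kWh measured at that hour on several days.
READINGS = {
    7: [0.8, 0.9, 0.7],
    13: [0.4, 0.5, 0.3],
    19: [2.1, 2.4, 2.2],
    23: [0.3, 0.2, 0.4],
}


def hourly_profile(readings):
    """Average consumption for each hour of the day across all recorded days."""
    return {hour: mean(values) for hour, values in readings.items()}


def suggest_shift(readings):
    """Hypothetical rule: return (peak hour, lowest-consumption hour) so flexible loads can be moved."""
    profile = hourly_profile(readings)
    peak = max(profile, key=profile.get)
    off_peak = min(profile, key=profile.get)
    return peak, off_peak


if __name__ == "__main__":
    peak, off_peak = suggest_shift(READINGS)
    print(f"Consider shifting flexible loads from {peak}:00 to {off_peak}:00")
```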

Big Data is also essential to the development of robotics. Robots generate and use large volumes of data to understand their environment and integrate into it intelligently. Using self-learning algorithms based on the analysis of this data, robots are able to improve their behavior and carry out ever more complex tasks, such as piloting an aircraft. In the US, robots are now able to perceive ethnic similarities using crowdsourced data.

Develop smart cities

Big (Open) Data is inseparable from the development of smart cities and territories. A typical example is the optimization of traffic flows based on real-time “crowdsourced” information from GPS devices, sensors, mobiles or weather stations.
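To illustrate how crowdsourced reports can feed traffic optimization, the sketch below groups hypothetical GPS speed reports by road segment and flags segments whose median speed falls below half their free-flow speed. Segment names, speeds and the 50% threshold are assumptions, not values from any real system.

```python
from collections import defaultdict
from statistics import median

# Hypothetical crowdsourced reports: (road segment id, observed speed in km/h).
REPORTS = [
    ("A1-north", 28), ("A1-north", 35), ("A1-north", 30),
    ("ring-east", 72), ("ring-east", 65), ("ring-east", 70),
]

# Hypothetical free-flow (uncongested) speeds per segment, in km/h.
FREE_FLOW = {"A1-north": 90, "ring-east": 80}


def congested_segments(reports, free_flow, threshold=0.5):
    """Return segments whose median reported speed is below threshold * free-flow speed."""
    speeds = defaultdict(list)
    for segment, speed in reports:
        speeds[segment].append(speed)
    # The median is less sensitive than the mean to a few outlier reports.
    return sorted(
        seg for seg, values in speeds.items()
        if seg in free_flow and median(values) < threshold * free_flow[seg]
    )


if __name__ == "__main__":
    print(congested_segments(REPORTS, FREE_FLOW))  # prints: ['A1-north']
```

In a real deployment the reports would arrive as a continuous stream and be aggregated over sliding time windows rather than a fixed list.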

Big Data enables cities, especially megacities, to connect sectors that previously operated in silos and make them interact: private and professional buildings, infrastructure and transport systems, energy production and resource consumption, and so on. Only Big Data modeling makes it possible to integrate and analyze the countless parameters coming from these different sectors of activity. This is also the goal of IBM’s Smarter Cities initiative.

In the area of security, authorities will be able to use the power of Big Data to improve the surveillance and management of events that threaten our security, or to predict possible criminal activity, in the physical world (theft, road accidents, disaster management, …) or in the virtual world (fraudulent financial transactions, electronic espionage, …).