Corporate Passwordless Authentication: Issues to address before getting on board

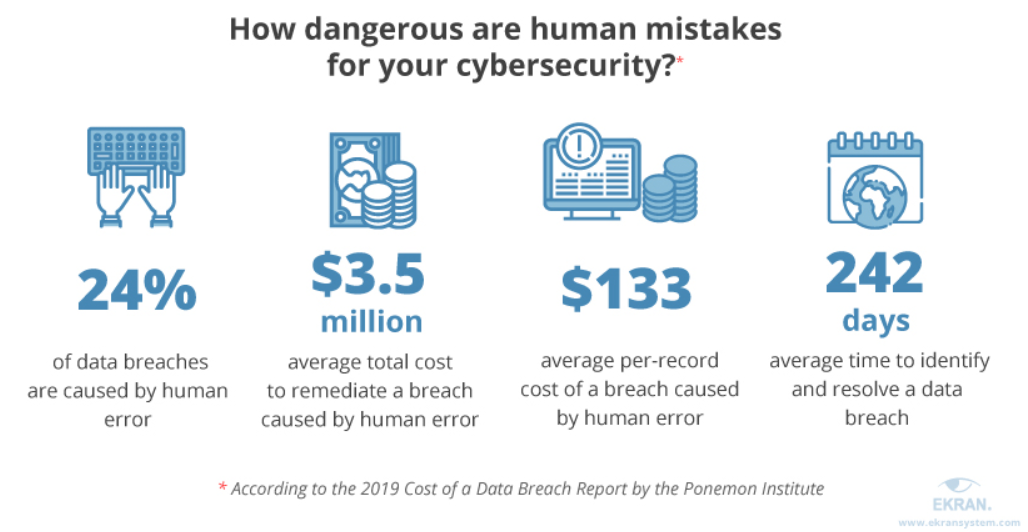

With the rise of remote work, security breaches are attracting increasing attention. Passwords are often at the root of the problem, and breaches cause massive financial damage and reputational harm to the companies concerned. According to the Verizon 2021 Data Breach Investigations Report, credentials are the primary means by which bad actors break into an organization, with 61 percent of breaches attributed to leveraged credentials. Passwords with privileged access to organizational systems and networks are prime targets for hackers, because a single compromised account can yield so much information.

Figures like these are one reason why many companies are now exploring the advantages of secure passwordless authentication. Secure logins without a password do not only bring companies cost savings and a smoother login experience. They also strengthen security while millions of employees continue to work remotely, even after the pandemic has passed.

Some companies may have already planned their passwordless strategy and are now ready to implement it. As many of them know, going password-free is more of a journey than a destination. What they can do is make sure they avoid the deepest potholes so that the journey runs as smoothly as possible.

The journey towards a passwordless strategy is different for every company. For example, organizations could implement a passwordless smart card approach, a passwordless FIDO2/WebAuthn approach, or a hybrid that combines the two to meet diverse business needs. But no matter which path companies take, there are common pitfalls that can be avoided once they are known.

- When it comes to implementation, a passwordless solution can involve multiple products installed at different times for different user levels. The journey towards a passwordless future isn't always swift, and obstacles may appear along the way. Companies can't necessarily see the entire route, but they can prepare for what lies beyond each obstacle. Specifically, companies should start with the most sensitive, important, and critical use cases and user groups and then gradually expand the passwordless implementation.

- Passwordless is not just an IT implementation. Because it can significantly change corporate culture and processes, every department within the company must be included. If the roadmap is narrow or created by only one department, it is more likely to fail once it has to be communicated to users, HR, and senior management. The key to success is an integrated approach that involves all key stakeholders from the start to achieve maximum user adoption, and it is this adoption that raises the security level of the entire company.

- When technical teams lead a project, communication with users and user training are often forgotten. The communications or training team should therefore plan live or virtual events, build positive expectations, and make the new solutions and processes easier for the rest of the workforce to understand.

- IT teams must verify at the earliest stage that the planned passwordless implementation will work as expected across all key systems, use cases, and users. A sensible first step is to set up a test environment that demonstrates end-to-end connectivity between the existing systems and the authentication technology for the most important users and user groups, and to check whether their defined success criteria can be met.
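The first and last points above, starting with the most critical user groups and checking each against defined success criteria in a test environment, can be illustrated with a small planning script. This is a minimal sketch only: the group names, criticality scale, metrics (`login_success_rate`, `median_login_seconds`), and thresholds are hypothetical assumptions, not figures from any real deployment or product.

```python
from dataclasses import dataclass


@dataclass
class UserGroup:
    # Hypothetical model of a user group's results in a passwordless pilot.
    name: str
    criticality: int  # assumed scale: higher = more sensitive/critical
    login_success_rate: float  # fraction of successful test logins, 0.0 to 1.0
    median_login_seconds: float  # median time to complete a test login


# Hypothetical success criteria; each organization defines its own.
MIN_SUCCESS_RATE = 0.98
MAX_MEDIAN_LOGIN_SECONDS = 10.0


def rollout_order(groups: list[UserGroup]) -> list[UserGroup]:
    """Order groups so the most critical ones are onboarded first.

    sorted() is stable, so groups with equal criticality keep their input order.
    """
    return sorted(groups, key=lambda g: g.criticality, reverse=True)


def meets_criteria(group: UserGroup) -> bool:
    """Check one group's test-environment results against the success criteria."""
    return (
        group.login_success_rate >= MIN_SUCCESS_RATE
        and group.median_login_seconds <= MAX_MEDIAN_LOGIN_SECONDS
    )


def plan_waves(groups: list[UserGroup]) -> tuple[list[str], list[str]]:
    """Split groups into those ready to go live and those that must wait.

    Groups are considered in criticality order. The rollout pauses at the
    first group that fails its criteria, so less critical groups are not
    added before the blocking issue is resolved.
    """
    ready: list[str] = []
    blocked: list[str] = []
    for group in rollout_order(groups):
        if not blocked and meets_criteria(group):
            ready.append(group.name)
        else:
            blocked.append(group.name)
    return ready, blocked


if __name__ == "__main__":
    pilot = [
        UserGroup("finance", 5, 0.99, 6.5),
        UserGroup("helpdesk", 3, 0.95, 12.0),
        UserGroup("it-admins", 5, 0.995, 4.0),
        UserGroup("sales", 2, 0.99, 7.0),
    ]
    ready, blocked = plan_waves(pilot)
    print("ready:", ready)
    print("blocked:", blocked)
```

With the sample data, `finance` and `it-admins` are ready to go live, while `helpdesk` misses the success-rate threshold and `sales` waits behind it. Pausing at the first failure is just one possible design choice; an organization could equally let unrelated groups proceed independently.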

Conclusion

Because improper password use often represents an enormous security risk, more and more companies are looking for alternatives and would like to make passwordless login the standard. This change cannot and should not happen overnight. It is equally important to choose a holistic, well-founded path, because only then does the change deliver the maximum gain in IT security. Companies that are aware of the common pitfalls of switching and consciously avoid them will be well on their way to a future without passwords.