Impact of Artificial Intelligence on the Future of the Labor Market

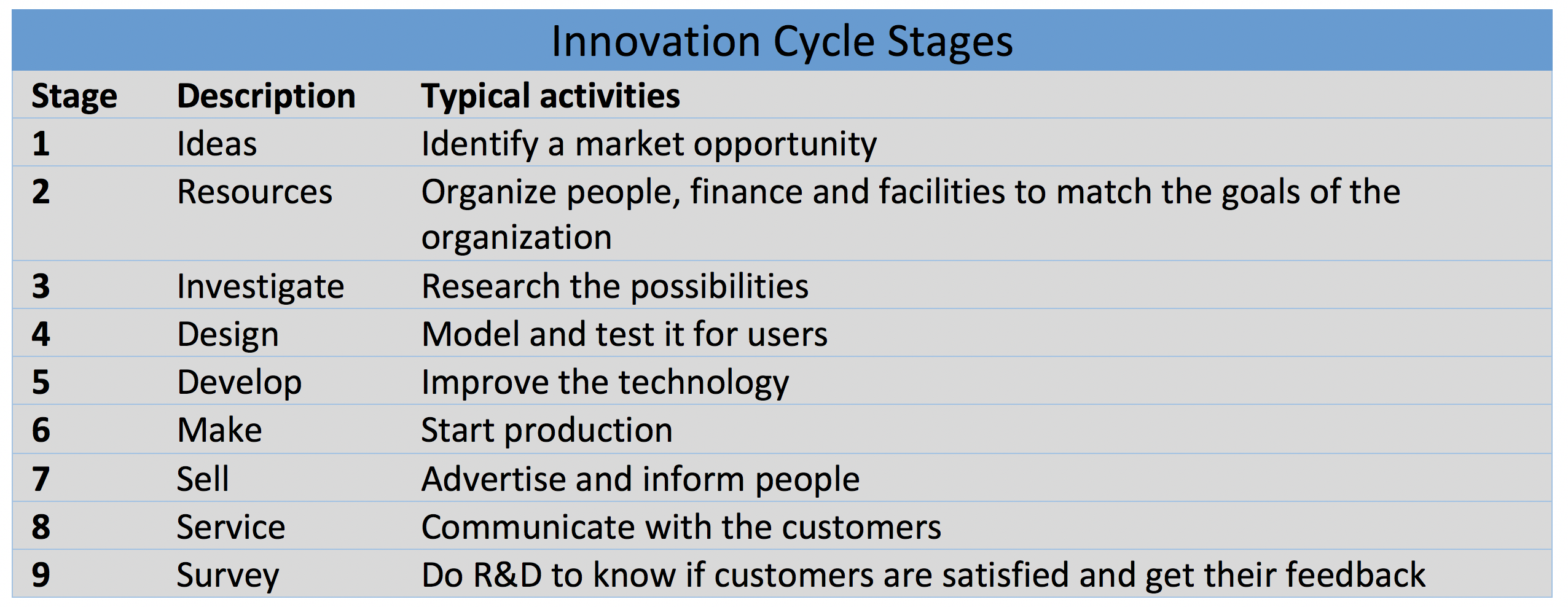

Disruptive changes to business models are having a profound impact on the employment landscape and will continue to transform the workforce over the coming years. Many of the major drivers of transformation now affecting global industries are expected to have a significant impact on jobs, ranging from job creation to job displacement, and from higher labor productivity to widening skills gaps. In many industries and countries, the most in-demand occupations or specialties did not exist ten or even five years ago, and the pace of change is set to accelerate.

Artificial Intelligence (AI) is changing how companies work. Technologies such as cognitive computing, advanced analytics and machine learning enable companies to gain new experience and groundbreaking insights.

AI is becoming ever more pervasive, from physical robots in manufacturing to the automation of banking, financial services and insurance processes. Few, if any, industries remain untouched by this trend.

Advances in AI are bringing about a paradigm shift for people and businesses alike, and with it new expectations that companies must meet. As a result, AI is becoming increasingly important for simplifying complex processes and empowering businesses like never before.

In such a rapidly evolving employment landscape, the ability to anticipate and prepare for future skills requirements, job content and the aggregate effect on employment is increasingly critical for businesses, governments and individuals, both to seize the opportunities these trends present and to mitigate undesirable outcomes.

AI: Impact on the labor market

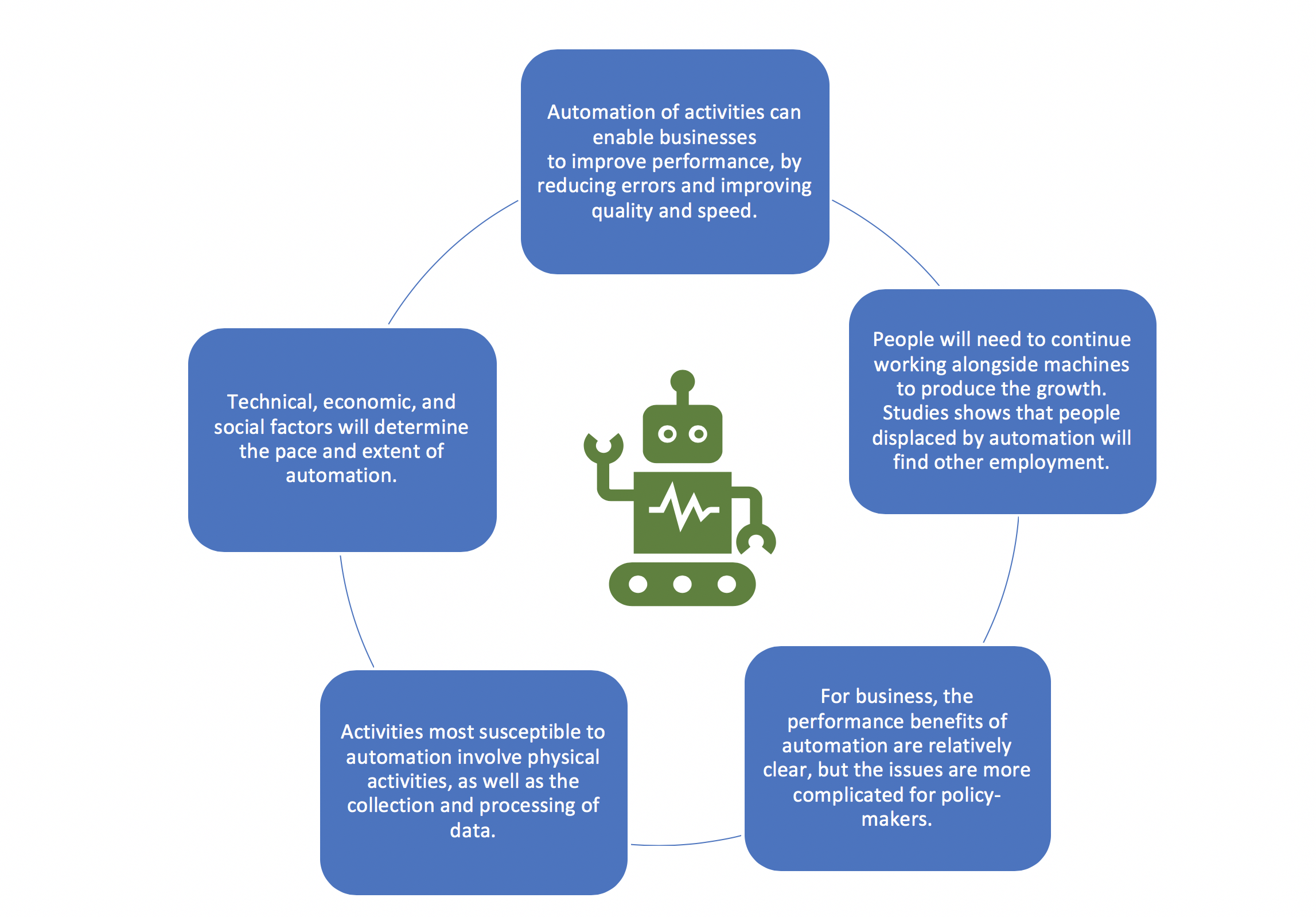

Whenever AI comes up, opinions vary widely. The issue divides those who believe AI will make our lives better from those who believe it will accelerate human irrelevance and lead to job losses. It is important to understand that introducing AI is not about replacing people but about expanding human capabilities. AI technologies enable business transformation by doing work that people do less well, such as processing large amounts of data quickly, efficiently and accurately.

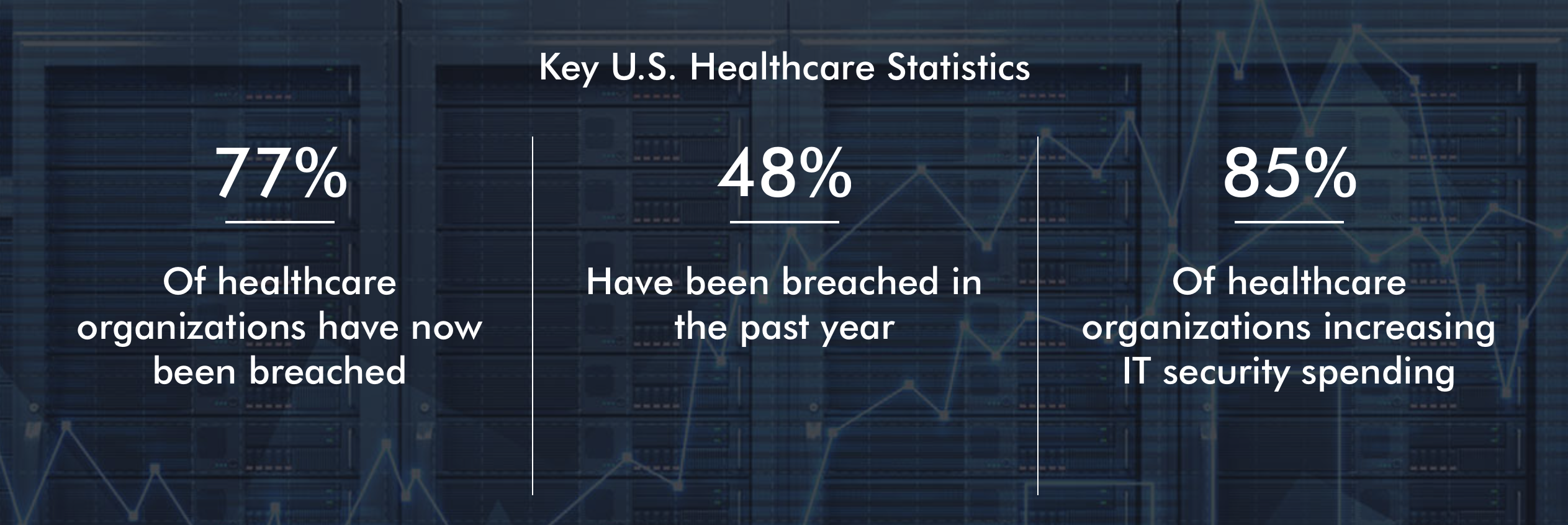

Humans and AI reinforce each other. Although one analyst study suggests that around 30% of global working hours could be automated by 2030, AI can help by taking on the monotonous, repetitive parts of today's jobs. This frees employees to focus on work that is more strategic or requires a more analytical approach. It does, however, also require retraining the existing workforce to some degree.

This new way of working has already begun to affect the job market: the development and deployment of new technologies such as AI is expected to create millions of jobs worldwide. In the future, millions of people will either change jobs or acquire new skills to support the use of AI.

AI skills: The gap

While AI will drive a significant transformation of the labor market, there is currently a gap between this opportunity and the skills of today's workforce. When companies experiment with AI, many realize that they lack the internal skills to implement it successfully. Workers need new education and skills to adapt their jobs to the opportunities AI creates, which in turn calls for new trainers. AI technologies also require the development and maintenance of new, advanced systems, so people with knowledge and experience in these areas are in high demand.

There is currently no agreement on who should take responsibility for training current and future workers. Companies, governments, academic institutions and individuals could all be held responsible for managing this retraining. To meet current and future demand for AI skills, companies should give their existing employees opportunities for further education and training, so that they become the workers who monitor and manage the implementation and use of AI, including the interaction between humans and machines. Only when all these groups take responsibility will the workforce be able to develop the necessary AI skills effectively and take companies to the next level.

Changing times

In summary, it is safe to say that, sooner or later, AI will lead to a redesign of the workplace. We expect its innovative possibilities to be harnessed in more and more industries.

Above all, AI is a transformative force that must be channeled so that it benefits both organizations and society as a whole. We should all be actively involved in making the most of it.