Cyber-Crime: Attackers Have 7 Days to Exploit a Vulnerability

An analysis by Tenable Research shows that cybercriminals have an average of seven days to exploit a vulnerability. During this time, they can attack their victims, search for sensitive data, launch ransomware attacks, and inflict significant financial damage.

Only after this seven-day window, on average, do companies scan their networks for vulnerabilities and assess whether they are at risk.

The Tenable Research team found that cybercriminals have an average of seven days to exploit a vulnerability once a matching exploit is available. Security teams, however, assess new vulnerabilities in the enterprise IT network only every 13 days on average.

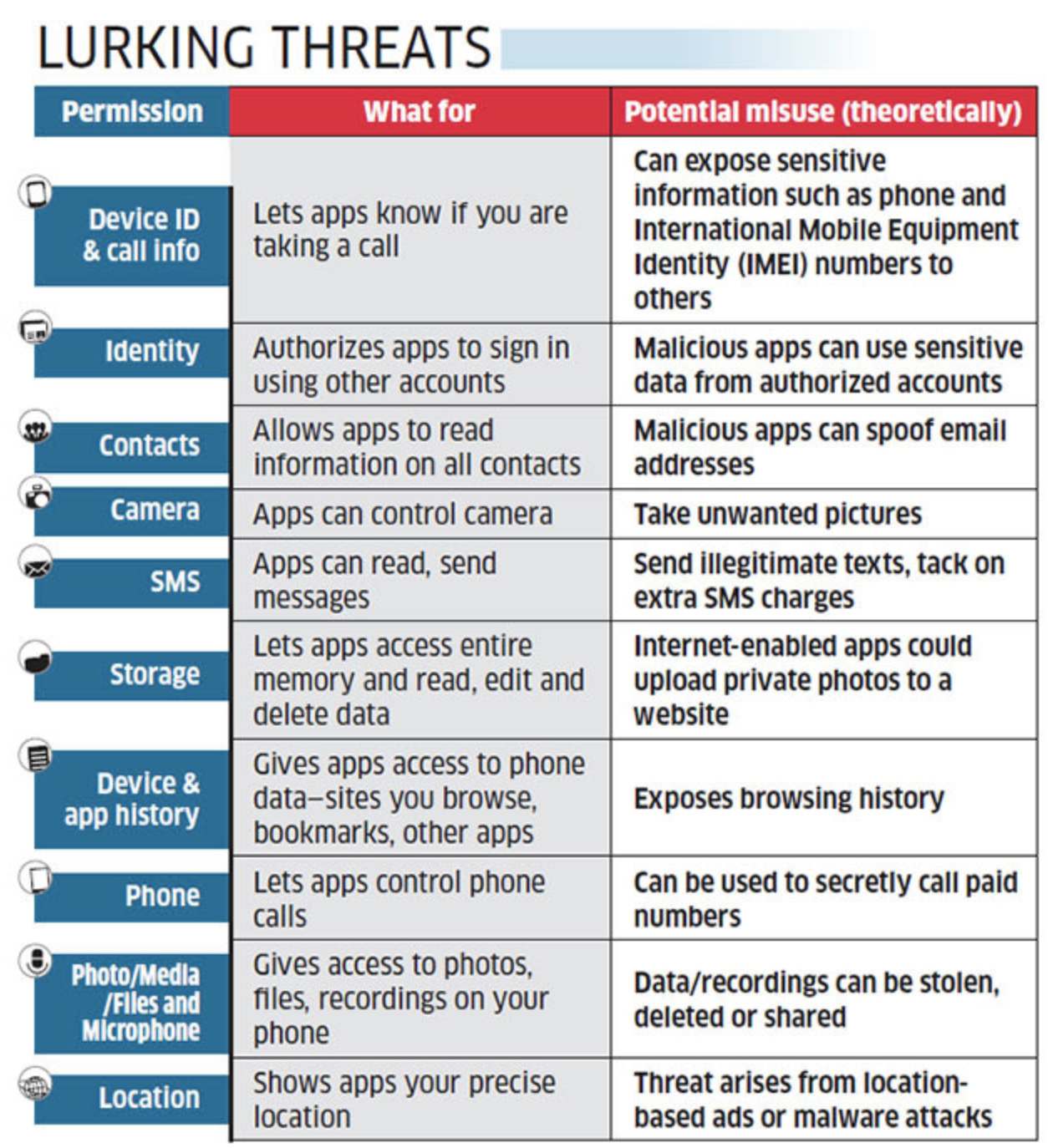

This assessment is the first, decisive step in determining total cyber exposure in modern computing environments. The term cyber exposure describes a company’s entire IT attack surface and focuses on how those responsible can identify and reduce vulnerabilities. The timing gap means that cybercriminals can attack their victims at will while security teams remain in the dark about the real threat situation.

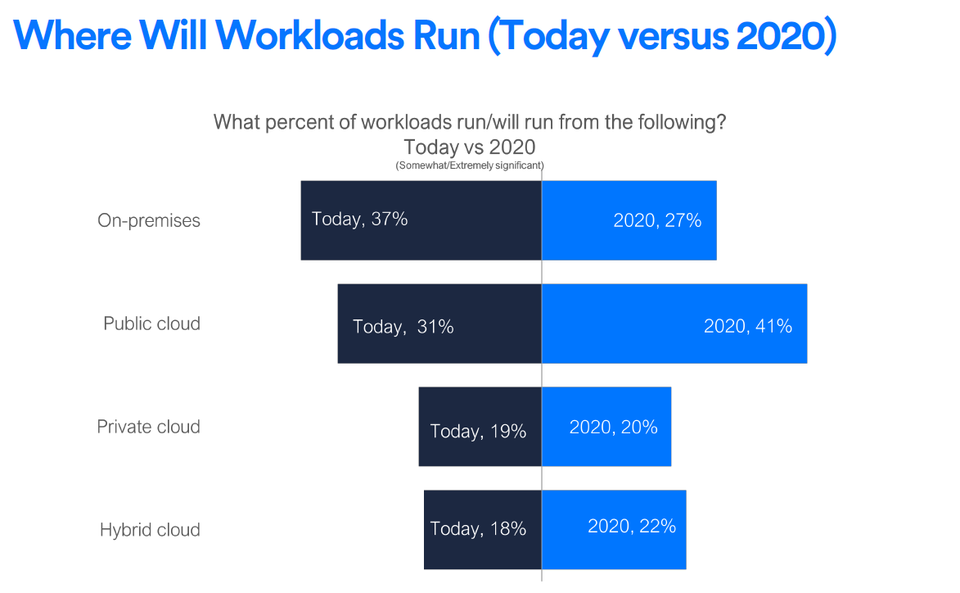

Digital transformation has significantly increased the number and types of new technologies and computing platforms – from cloud to IoT to operational technology – and has expanded the IT attack surface. This changed attack surface almost inevitably leads to a flood of vulnerabilities. Yet many companies do not adapt their cyber exposure management to the new realities and continue to run their programs in fixed cycles, for example every six weeks. Today’s dynamic computing platforms require a new cybersecurity approach. Delays are a security problem from the outset, not least because security and IT teams work in organizational silos. Attackers benefit because many CISOs struggle to gain an overview of, and visibility into, a constantly changing threat landscape. They also have trouble managing cyber risks based on prioritized business risks.

The study results showed:

– In 76% of the analyzed vulnerabilities, the attacker had the advantage. Where the defender had the advantage, it was not because of their own activities but because the attackers could not access the exploit directly.

– On average, attackers had seven days to exploit a vulnerability before the company even identified it.

– For 34% of the vulnerabilities analyzed, an exploit was available on the same day the vulnerability was discovered. This means that attackers set the pace right from the start.

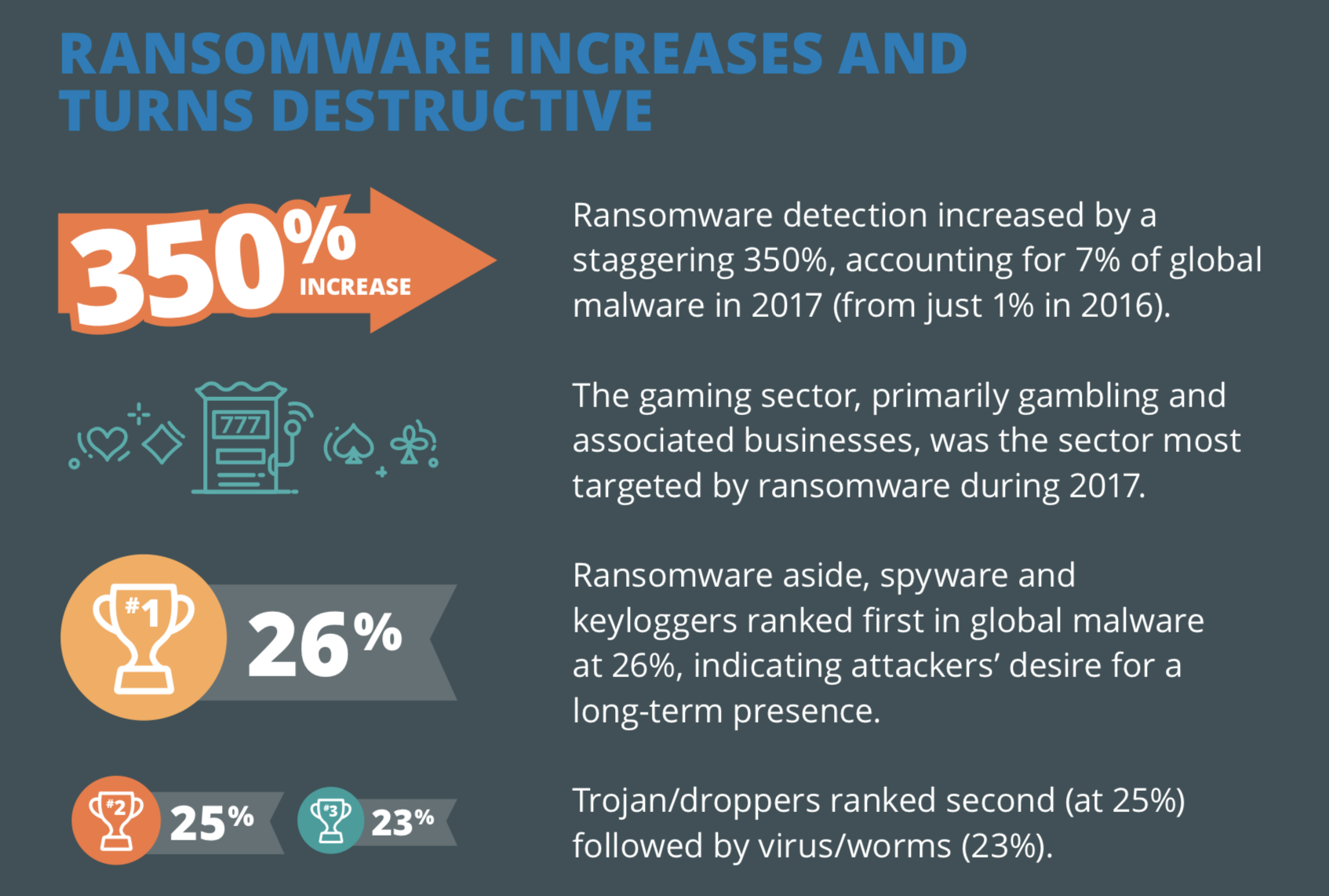

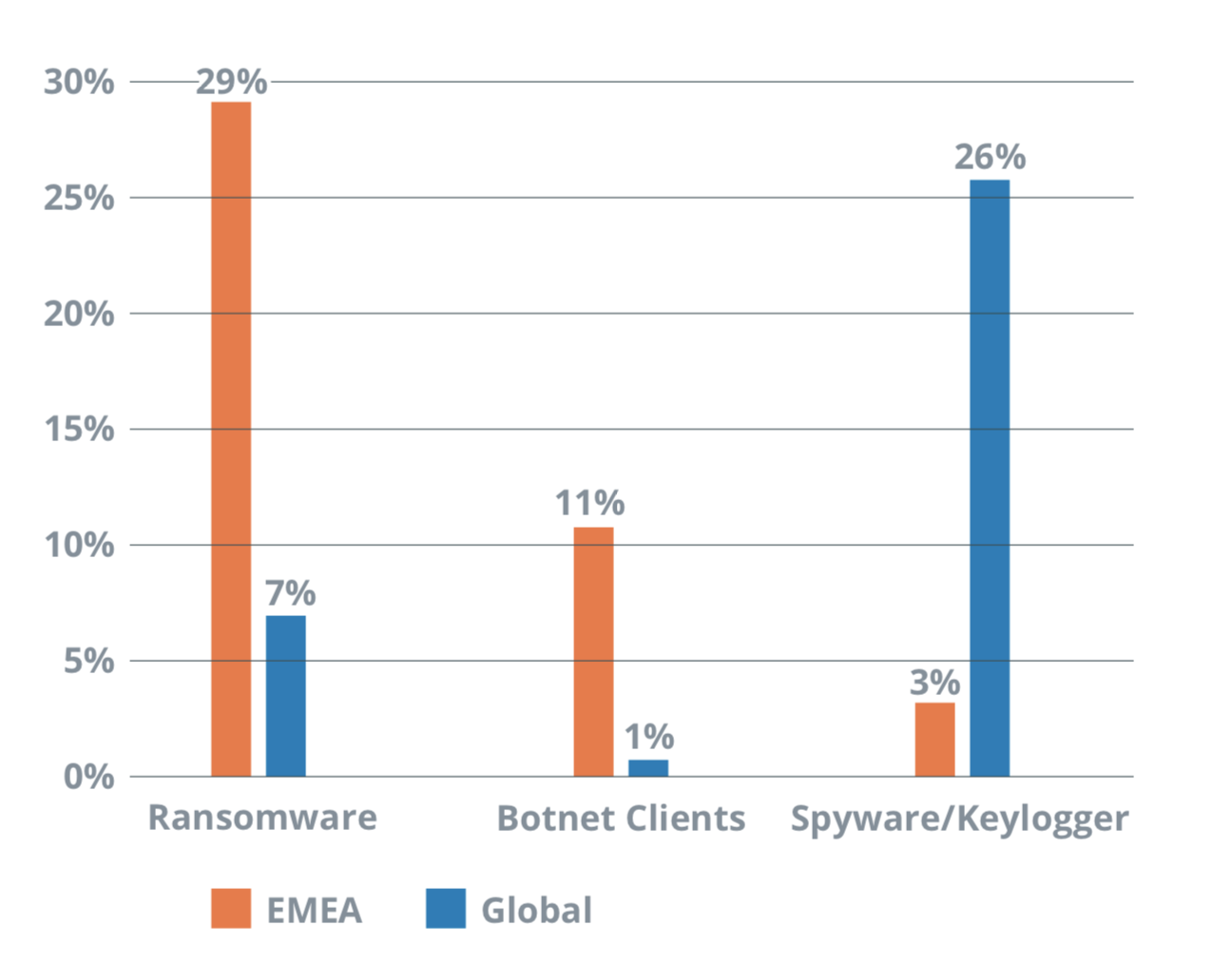

– 24% of the analyzed vulnerabilities are targeted by malware, ransomware or available exploit kits.
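To make these figures concrete, the following is a minimal sketch of how such metrics could be computed from per-vulnerability dates. It is not Tenable's methodology: the record layout, the field names (`disclosed`, `exploit`, `assessed`), the function names and all sample dates are hypothetical and chosen only for illustration.

```python
from datetime import date
from statistics import median

# Hypothetical sample records; identifiers and dates are invented.
records = [
    {"id": "VULN-A", "disclosed": date(2018, 1, 1), "exploit": date(2018, 1, 1), "assessed": date(2018, 1, 14)},
    {"id": "VULN-B", "disclosed": date(2018, 1, 5), "exploit": date(2018, 1, 11), "assessed": date(2018, 1, 18)},
    {"id": "VULN-C", "disclosed": date(2018, 2, 1), "exploit": date(2018, 2, 20), "assessed": date(2018, 2, 10)},
    {"id": "VULN-D", "disclosed": date(2018, 3, 1), "exploit": date(2018, 3, 4), "assessed": date(2018, 3, 12)},
]

def attacker_lead_days(rec):
    """Days from exploit availability to first assessment.

    A positive value means the exploit existed before the defender looked.
    """
    return (rec["assessed"] - rec["exploit"]).days

def summarize(recs):
    leads = [attacker_lead_days(r) for r in recs]
    attacker_ahead = sum(1 for d in leads if d > 0)
    same_day = sum(1 for r in recs if r["exploit"] == r["disclosed"])
    return {
        "attacker_ahead_share": attacker_ahead / len(recs),
        "same_day_exploit_share": same_day / len(recs),
        "median_lead_days": median(leads),
    }

summary = summarize(records)
print(summary)
```

With real scan and exploit data in place of the invented records, the same three numbers would correspond to the share of vulnerabilities where the attacker had the advantage, the share with a same-day exploit, and the typical length of the attacker's window.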

Digital transformation has dramatically increased the number and types of new technologies and computing platforms – from the cloud to the IoT to operational technologies. This in turn has led to dramatic growth in the attack surface, which has fueled a never-ending flood of vulnerabilities. Many organizations run their operational programs on a fixed cycle (e.g., every six weeks), which is not enough given the dynamics of today’s IT environment. Attacks and threats are developing at a rapid pace and can hit any business. Effective cyber exposure management with a new approach to vulnerability management helps to adapt security to new IT environments: it is based on continuous integration and deployment and is consistent with modern computing.

The cyber exposure gap cannot be reduced by security teams alone but requires better coordination with the operational business units. This means that security and IT teams must gain a shared view of the company’s systems and resources and continuously look for vulnerabilities, prioritizing and remediating them based on business risk.
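As a rough illustration of prioritizing by business risk, the sketch below ranks findings by a simple score. The `Finding` fields, the 1 to 5 criticality scale and the weighting (doubling the score when an exploit is available) are assumptions made for this example and do not come from the study.

```python
from dataclasses import dataclass

@dataclass
class Finding:
    vuln_id: str
    asset: str
    asset_criticality: int   # hypothetical scale: 1 (low) to 5 (business-critical)
    exploit_available: bool
    severity: float          # 0 to 10, for example a CVSS base score

def risk_score(f):
    # Assumed weighting: double the score when a public exploit exists.
    multiplier = 2.0 if f.exploit_available else 1.0
    return f.severity * f.asset_criticality * multiplier

def prioritize(findings):
    """Return findings ordered from highest to lowest risk score."""
    return sorted(findings, key=risk_score, reverse=True)

# Invented sample findings.
findings = [
    Finding("VULN-A", "test-server", 1, True, 9.8),
    Finding("VULN-B", "payment-gateway", 5, True, 7.5),
    Finding("VULN-C", "hr-portal", 3, False, 8.0),
]
ranked = [f.vuln_id for f in prioritize(findings)]
print(ranked)
```

In this example the highest-severity finding ends up last because it sits on a low-criticality asset, which is the point of weighting by business impact rather than by severity alone.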

The study shows how important it is to actively and holistically analyze and measure cyber exposure across the entire modern attack surface. Real-time insights are not only a fundamental element of cyber hygiene but also the only way for companies to gain a head start on most vulnerabilities.